What is Generative AI?


Sep 06, 2024 · 15 min read

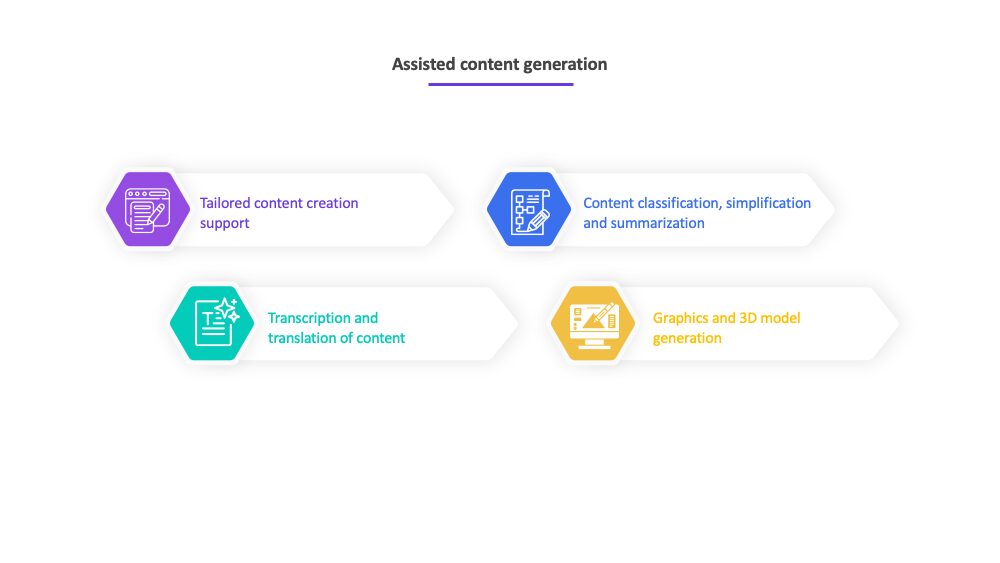

Generative AI (GenAI) can:

Generate new and original content.

Learn patterns from unstructured data such as documents, images, and music.

Generate, transform, or summarize text, images, music, videos, and 3D renderings.

Be used easily, right out of the box, which sets GenAI apart from most of the AI that came before it.

Typically, a GenAI model goes through three main phases:

Each GenAI model is trained on a large dataset so that it can learn the data’s underlying structure and features. For example, a GenAI model trained on a dataset of books learns how chapters, paragraphs, and sentences are organized.

Once trained, GenAI models can generate new content that is similar, but not identical, to their training data. This means GenAI can create new images, compose music, write stories, or generate other types of data.
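To make the train-then-generate idea concrete, here is a deliberately tiny sketch. It is not how production GenAI models work (they use large neural networks, not word-pair counts); it assumes a toy bigram model in which “training” records which word follows which, and “generation” samples new word sequences from those learned patterns. The corpus and function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus: str) -> dict[str, list[str]]:
    """'Training': record every word that follows each word in the corpus."""
    words = corpus.split()
    transitions: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate(model: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> str:
    """'Generation': repeatedly sample a next word seen after the previous one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:  # no learned continuation, so stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical toy training corpus
corpus = (
    "the model reads the books . the model learns the patterns . "
    "the books contain chapters and the chapters contain sentences ."
)
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=12, seed=7))
```

Every adjacent word pair in the output occurred in the training text, yet the sequence as a whole can be new: a small-scale version of “similar but not identical”.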

During their operational phase, GenAI models can also learn from the queries and inputs they receive. This typically requires additional mechanisms beyond the initial training phase. Continuous learning from user inputs also raises privacy and security concerns.

After their initial training on a large dataset, operational GenAI models can be fine-tuned with additional data, which may include specific queries or inputs received during the model’s operational phase. Fine-tuning lets the model adapt to more specific contexts and improves its performance in particular areas:

Some GenAI models use reinforcement learning from human feedback (RLHF). In this approach, people rate or rank the model’s outputs, and those preferences are used, typically by training a reward model, to steer the model toward better responses. This kind of feedback loop helps the GenAI model ‘learn’ from its interactions with users.
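The preference-learning step at the heart of RLHF can be sketched in a few lines. This is a simplification under stated assumptions: instead of a neural reward model, it fits one scalar score per (hypothetical) response using the Bradley-Terry model, where the probability that response a is preferred over response b is sigmoid(r_a - r_b). A full RLHF pipeline would then use such a reward signal to update the generating model, which is not shown here.

```python
import math


def fit_reward_scores(preferences: list[tuple[str, str]],
                      n_iters: int = 500, lr: float = 0.1) -> dict[str, float]:
    """Fit one scalar reward per response from (preferred, rejected) pairs.

    Bradley-Terry model: P(a preferred over b) = sigmoid(r_a - r_b).
    Scores are found by gradient ascent on the log-likelihood of the human choices.
    """
    scores = {response: 0.0 for pair in preferences for response in pair}
    for _ in range(n_iters):
        grads = {response: 0.0 for response in scores}
        for preferred, rejected in preferences:
            p = 1.0 / (1.0 + math.exp(-(scores[preferred] - scores[rejected])))
            # d/dx log(sigmoid(x)) = 1 - sigmoid(x): push the winner up, the loser down
            grads[preferred] += 1.0 - p
            grads[rejected] -= 1.0 - p
        for response in scores:
            scores[response] += lr * grads[response]
    return scores


# Hypothetical human judgments: (response the rater preferred, response they rejected)
preferences = [
    ("concise_answer", "rambling_answer"),
    ("concise_answer", "off_topic_answer"),
    ("rambling_answer", "off_topic_answer"),
]
scores = fit_reward_scores(preferences)
print(sorted(scores, key=scores.get, reverse=True))
```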

Active learning is another way GenAI models can refine themselves. Here, the model identifies the inputs it is least certain about and asks for clarification or more information on them. Doing so helps the GenAI model improve its ‘understanding’ and its ability to produce good outputs for new inputs.
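A common way to decide which inputs deserve clarification is uncertainty sampling: rank inputs by the entropy of the model’s predicted probabilities and query the most uncertain ones first. The sketch below assumes a hypothetical intent classifier whose outputs (probabilities over three intents) are already available; the prompts and numbers are illustrative only.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats; higher means the model is less sure."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_clarification(predictions: dict[str, list[float]], k: int) -> list[str]:
    """Return the k inputs whose predicted distributions are most uncertain."""
    ranked = sorted(predictions, key=lambda text: entropy(predictions[text]), reverse=True)
    return ranked[:k]


# Hypothetical classifier outputs: probabilities over three intents
# (for example "make booking", "cancel order", "other") for each user prompt.
predictions = {
    "Book a table for two at 7pm": [0.95, 0.03, 0.02],
    "Can you sort it out?": [0.34, 0.33, 0.33],
    "Cancel my order": [0.10, 0.85, 0.05],
    "It's about the thing from yesterday": [0.50, 0.45, 0.05],
}
print(select_for_clarification(predictions, k=2))
```

The two vague prompts are selected, so human effort goes where the model is least confident rather than being spread evenly across all inputs.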

Updating the model incrementally, as new data or queries arrive, allows it to adapt continuously, learning with time and ‘experience’ without retraining from scratch.
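Incremental (online) learning can be illustrated with stochastic gradient descent that updates a model one example at a time and never revisits old data. This sketch assumes a toy linear model and a hypothetical stream of examples following y = 3x + 1; real GenAI models are vastly larger, but the update-as-data-arrives principle is the same.

```python
import random


class OnlineLinearModel:
    """Toy linear model y ~ w*x + b, updated one example at a time (online SGD)."""

    def __init__(self, lr: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        """Take one gradient step on the squared error for this single example."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error


rng = random.Random(0)
model = OnlineLinearModel()
for _ in range(2000):
    x = rng.uniform(-1, 1)
    y = 3.0 * x + 1.0  # hypothetical pattern hidden in the incoming data
    model.update(x, y)  # learn from this example, then discard it
print(round(model.w, 2), round(model.b, 2))
```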

User preferences and interactions with GenAI models can be used to better tailor the models’ outputs. A text generation model, for example, could ‘learn’ to mimic a specific user’s preferred topics or writing style after numerous inputs over time. When a model learns from users’ inputs in this way, its developer should identify the potential issues, such as privacy risks, and may need to put mitigations in place.
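A minimal, hypothetical sketch of this kind of personalization: keep a per-user count of the topics a user engages with, and use it to re-rank candidate outputs. The `UserProfile` class and the topic tags are assumptions for illustration. Storing aggregated topic counts rather than raw prompts is one simple way to reduce the privacy exposure mentioned above.

```python
from collections import Counter


class UserProfile:
    """Hypothetical per-user profile: counts topics, not raw prompt text."""

    def __init__(self) -> None:
        self.topic_counts: Counter[str] = Counter()

    def record_interaction(self, topics: list[str]) -> None:
        """Update the profile after the user engages with content on these topics."""
        self.topic_counts.update(topics)

    def rank(self, candidates: list[tuple[str, list[str]]]) -> list[str]:
        """Order (text, topics) candidates by overlap with the user's past topics."""
        def score(candidate: tuple[str, list[str]]) -> int:
            _, topics = candidate
            return sum(self.topic_counts[t] for t in topics)
        return [text for text, _ in sorted(candidates, key=score, reverse=True)]


profile = UserProfile()
profile.record_interaction(["cycling", "travel"])
profile.record_interaction(["cycling"])

# Hypothetical candidate outputs, each tagged with topics
candidates = [
    ("Draft about stock markets", ["finance"]),
    ("Draft about a cycling tour of Italy", ["cycling", "travel"]),
]
print(profile.rank(candidates))
```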

The advent of GenAI brings heightened expectations, both of the AI’s own capabilities and of every aspect of customer engagement, including faster and better experiences, universal accessibility, and 24/7 availability. It also raises considerable safety and security concerns, around data privacy and intellectual property as well as the decisions AI may make based on potentially inaccurate data.

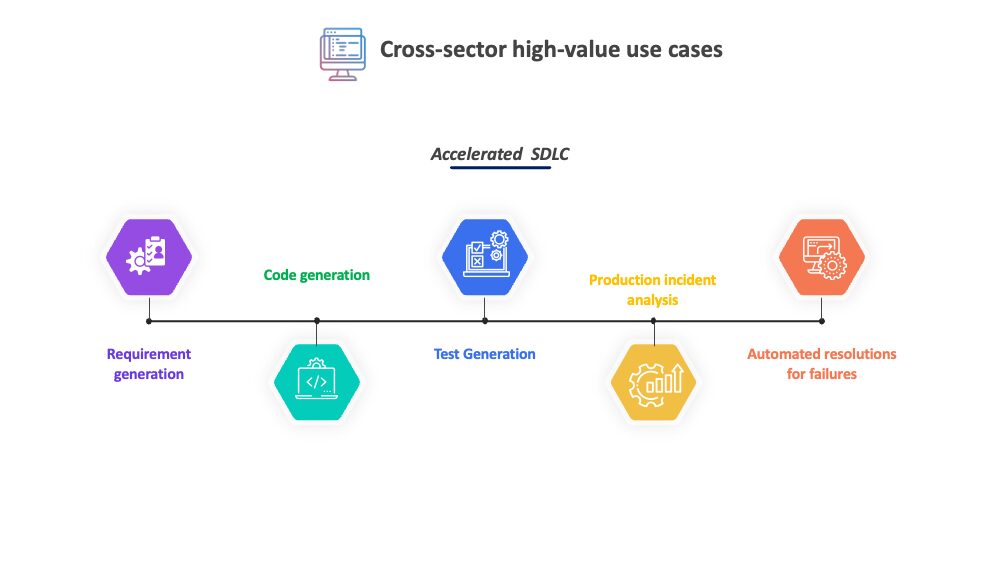

Across all sectors, GenAI’s impact is evident throughout the software development lifecycle, and it is a growing influence in software development and software testing in particular. GenAI is shaping digital Quality Engineering best practices, and AI data annotation and tagging has also emerged as a field. Your organization is most likely already working with GenAI applications, and software engineers and software testers in particular have seen their testing jobs evolve as a result.

Read about how Generative AI can be leveraged to redefine non-functional requirements gathering.

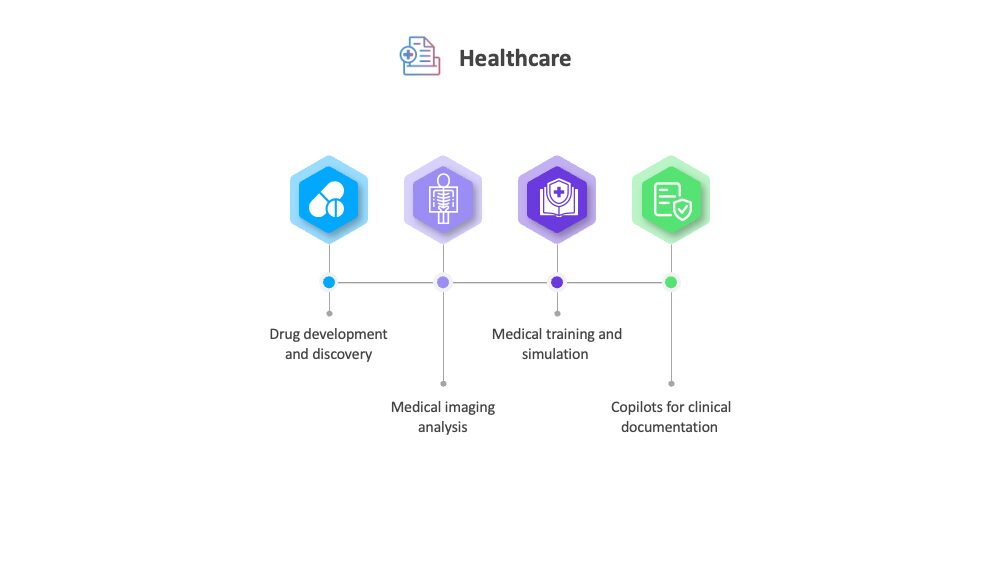

Read more about it: AI-Enabled Healthcare – How Quality Engineering Needs to Evolve to Meet New Challenges

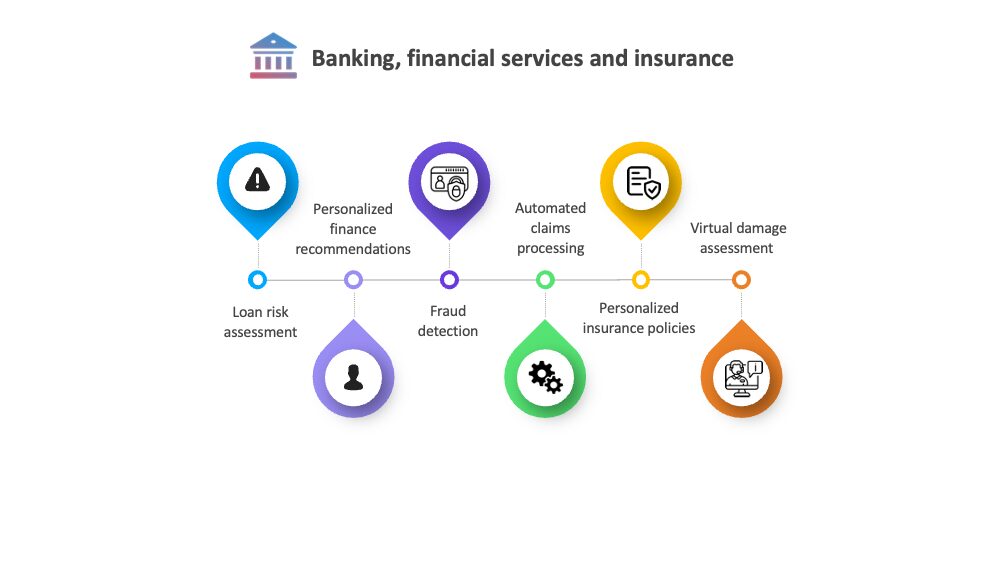

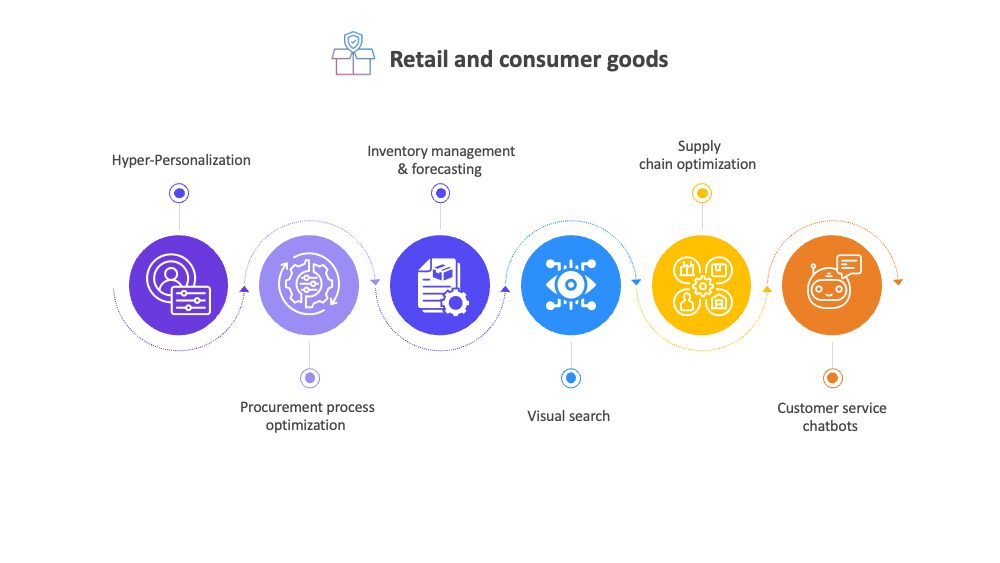

Read more about it: Unveiling the Retail Revolution: How Business AI is Redefining the Retail and Consumer Goods Landscape

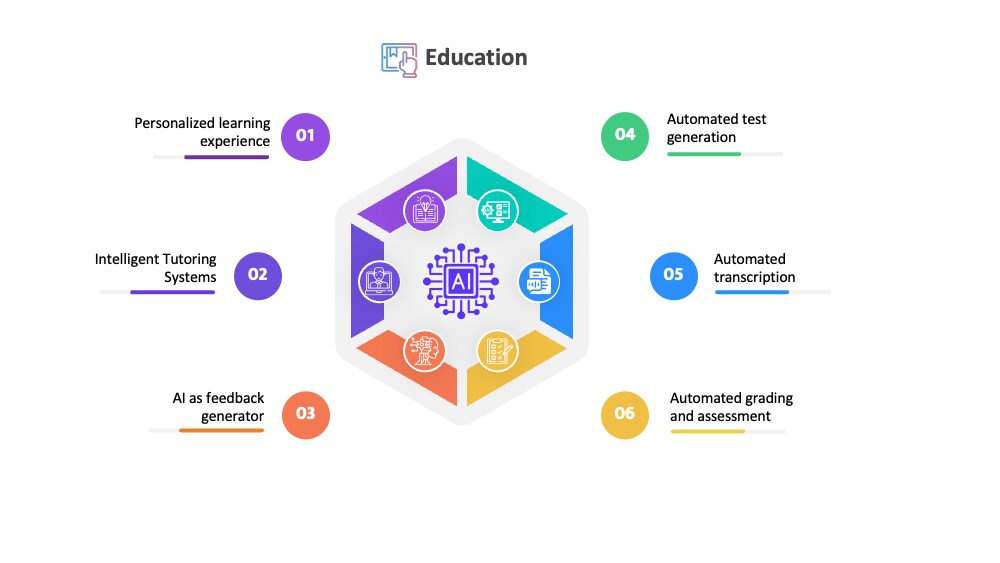

Read more about it: AI-Driven Predictive Models Improve Student Enrolment, Retention and Logistics for a Leading University

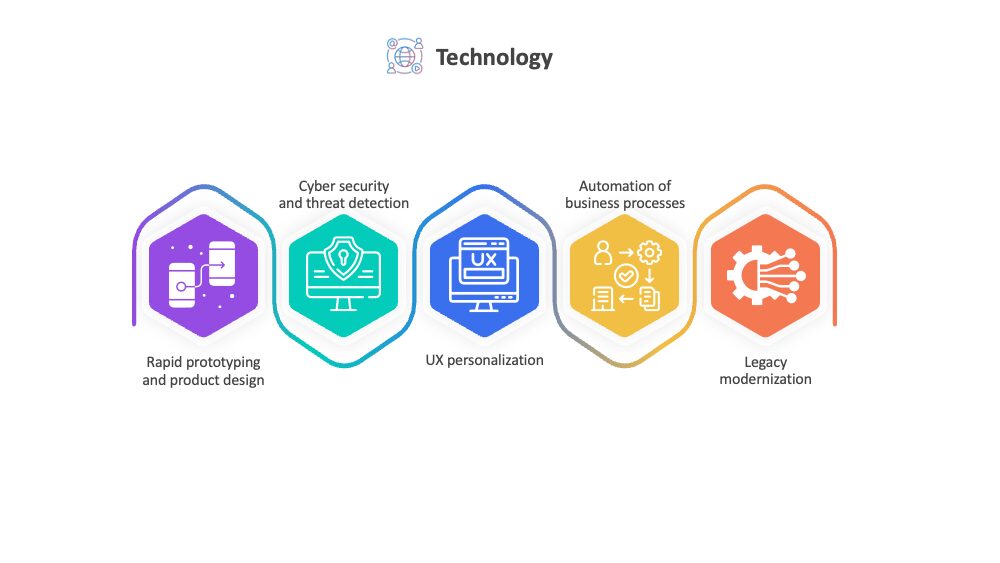

Read more about it: Leading Tech Company Ensures AR/VR Headset Inclusivity in Diverse Real-World Home Environments and Tech Innovator Successfully Captures Diverse Speech for Next-Gen NLP Model Training

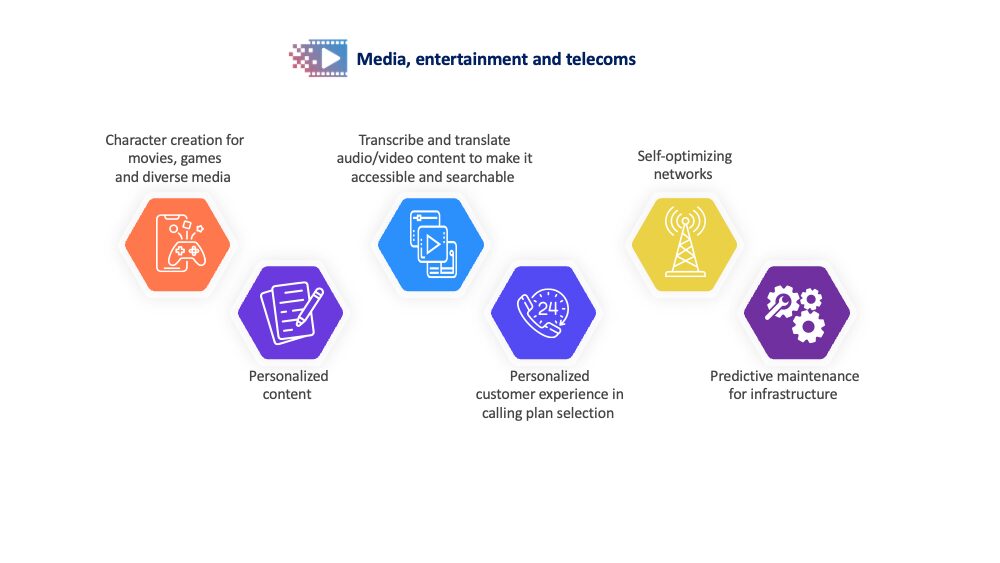

Read more about it: How AI, Predictive Analytics and Development Testing Services Can Fix Telcos’ Churn Problem.

The use of GenAI models such as ChatGPT may cause some concern; AI technologies are both scary and exciting. As ChatGPT itself says:

“As an AI language model, ChatGPT is a tool that can be used for both positive and negative purposes. It is important to recognize that while it has the potential to revolutionize the way we interact with technology and each other, it also has limitations and ethical considerations. Whether we fear or embrace ChatGPT depends on how it is developed, deployed, and used”.

ChatGPT

While generative AI is a powerful and transformative technology with a wide range of applications, any global legislative framework for it remains embryonic. GenAI therefore comes with responsibilities and ethical considerations that need to be addressed. Technology leaders are also particularly worried about the nondeterministic behavior and hallucinations of large language models (LLMs), where the AI generates plausible but incorrect outputs. There are additional concerns about whether GenAI outputs align with an organization’s culture, ethics, policies, and user experience standards.
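Part of the nondeterminism comes from decoding: many LLM deployments sample each next token from a probability distribution instead of always taking the most likely one. The sketch below assumes hypothetical next-token scores (logits) and shows how a temperature parameter reshapes that distribution. Lowering the temperature makes outputs more repeatable, but it does not by itself prevent hallucinations.

```python
import math
import random


def softmax(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens: list[str], logits: list[float],
                      temperature: float, rng: random.Random) -> str:
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates and scores for the next word after "The capital of France is"
tokens = ["Paris", "Lyon", "London"]
logits = [4.0, 2.0, 1.0]

for temperature in (1.0, 0.2):
    print(temperature, [round(p, 3) for p in softmax(logits, temperature)])

rng = random.Random()  # unseeded, so repeated runs can produce different samples
print([sample_next_token(tokens, logits, 1.0, rng) for _ in range(10)])
```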

GenAI is shaking things up in the enterprise world, but businesses operating GenAI models must ensure that the content generated is of high quality and meets the desired criteria.

Implementing continuous learning can be particularly challenging technically: it requires robust AI Quality Engineering to handle dynamic updates while still ensuring model stability. Relying solely on human oversight to manage these outputs is unrealistic and costly, especially for AI systems that interact directly with customers.
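One way Quality Engineering can protect stability during continuous learning is a regression gate: an updated model is promoted only if it scores at least as well as the current one on a fixed evaluation set. The sketch below assumes exact-match accuracy and hypothetical stand-in “models” built from lookup tables; a real gate would use richer metrics and much larger evaluation sets.

```python
from typing import Callable, Sequence

Model = Callable[[str], str]


def lookup_model(answers: dict[str, str]) -> Model:
    """Wrap a lookup table as a stand-in model (hypothetical, for illustration)."""
    return lambda prompt: answers.get(prompt, "")


def accuracy(model: Model, eval_set: Sequence[tuple[str, str]]) -> float:
    """Fraction of evaluation prompts where the model's answer matches exactly."""
    correct = sum(1 for prompt, expected in eval_set if model(prompt) == expected)
    return correct / len(eval_set)


def should_promote(candidate: Model, baseline: Model,
                   eval_set: Sequence[tuple[str, str]], max_drop: float = 0.0) -> bool:
    """Promote the updated model only if it does not regress by more than max_drop."""
    return accuracy(candidate, eval_set) >= accuracy(baseline, eval_set) - max_drop


# Hypothetical fixed evaluation set and model versions
eval_set = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
baseline = lookup_model({"2+2": "4", "capital of France": "Paris", "3*3": "6"})
improved = lookup_model({"2+2": "4", "capital of France": "Paris", "3*3": "9"})
regressed = lookup_model({"2+2": "5", "capital of France": "Lyon", "3*3": "9"})

print(should_promote(improved, baseline, eval_set))
print(should_promote(regressed, baseline, eval_set))
```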

Read AI Engines in Software Development: A Boon or a Bane? and Self-Healing Data Models: The Future of Resilient AI Systems.

To reduce bias in generated content, the training phase must address biases in the data. As the GenAI model learns from new inputs, it risks introducing new biases or unethical patterns, so continuous monitoring and ethical guidelines are necessary to mitigate these risks. Read how Qualitest helped a social media giant eliminate bias within the ground truth data for its next-gen VR headset, and read The Science of Human Data Collection.
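Continuous bias monitoring needs a measurable signal. One common, simple fairness metric is the demographic parity gap: the difference in positive-outcome rates between groups. The sketch below assumes hypothetical group labels, outcomes, and alert threshold; it is one metric among many, not necessarily the approach used in the case studies mentioned here.

```python
from collections import defaultdict


def positive_rate_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records: list[tuple[str, bool]]) -> float:
    """Largest difference in positive-outcome rate between any two groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical monitoring batch: (group label, did the model produce a favorable outcome?)
records = ([("A", True)] * 8 + [("A", False)] * 2
           + [("B", True)] * 5 + [("B", False)] * 5)

ALERT_THRESHOLD = 0.1  # hypothetical tolerance chosen by the model owner
gap = demographic_parity_gap(records)
print(f"parity gap = {gap:.2f}, alert = {gap > ALERT_THRESHOLD}")
```

Running this check on each new batch of outputs turns “continuous monitoring” into a concrete alert that can trigger human review.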

Managing the rights and ownership of GenAI-generated content is a complex and evolving area of law. Legal and compliance leaders should keep a close eye on changes to copyright law that apply to GenAI output, and should require users to scrutinize any generated output to ensure it does not infringe copyright or other intellectual property rights. Read more in Demystifying Compliance Risks: A Guide to Navigating ChatGPT Safely.

An ongoing GenAI challenge is preventing its malicious use, such as creating deepfakes or spreading ‘fake news’ and misinformation.

For more information on GenAI intellectual property and the battle against GenAI misuse, read: Demystifying Compliance Risks: A Guide to Navigating ChatGPT Safely.

GenAI’s capacity for continuous learning from user inputs undoubtedly raises privacy and security concerns. Security is a major concern for businesses because GenAI can become a huge new attack surface, particularly for large enterprises. Handling AI user data responsibly and securely is critical to using GenAI applications successfully.
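A basic safeguard when user inputs may feed continuous learning is to redact personal data before those inputs are logged or reused. The regular expressions below are hypothetical and deliberately simple (they catch only some email and phone formats); production systems typically rely on dedicated PII-detection tooling.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched PII span with a placeholder label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +44 20 7946 0958 about my order."))
```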

The Core Security Principles for GenAI focus on ensuring the ethical, safe, and secure deployment of this technology. The principles include:

Adherence to these principles is critical to foster trust and minimize risks associated with GenAI.

GenAI models face common threats and vulnerabilities that can undermine their integrity and security.

Included in these are:

In addition, biases in training data can lead to discriminatory outputs, while insufficient oversight can result in the unintentional generation of harmful or inappropriate content. Addressing these threats requires stringent, robust security measures and continuous monitoring.

Securing GenAI models requires a comprehensive approach that draws on best practices from cybersecurity and AI ethics. Best practices include:

It is also critical to build in explainability features so that model outputs are transparent and understandable. Access controls and encryption should be implemented to protect sensitive GenAI model data. Regularly updating models and monitoring their performance helps teams identify and mitigate threats as they emerge, keeping GenAI models secure and reliable.

Securing GenAI systems also raises ethical challenges, including issues of bias, privacy, accountability, and the potential misuse of AI-generated content. Fairness is vital, because biased training data leads to discriminatory outcomes that disproportionately affect marginalized communities.

The risk of the AI inadvertently generating or inferring sensitive data raises privacy concerns. Accountability is another major challenge, because determining who is responsible for the actions and decisions of GenAI models can be complex. The potential misuse of AI-generated content, such as deepfakes and misinformation, poses serious ethical dilemmas. Together, these challenges call for robust security measures and effective ethical frameworks that prevent harm and promote the responsible use of GenAI.

AI plays a vital role in strengthening cybersecurity defenses by improving the ability to detect, prevent, and respond to threats. Using machine learning algorithms, AI can analyze vast amounts of data in real time to identify patterns that indicate cyberattacks, such as anomalies in network traffic or unusual user behavior.

AI-driven systems can automate threat detection and response, which can shorten the time needed to contain potential breaches and reduce human error. AI can also help build predictive models that anticipate future attacks; because these models continuously learn and adapt to new threats, they enable proactive defense. Overall, AI significantly bolsters the resilience and efficacy of cybersecurity infrastructures.
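As a much simpler statistical stand-in for the machine learning models described above, the sketch below flags unusual network traffic with a rolling z-score: each minute’s request count is compared with the mean and standard deviation of the preceding window. The threshold, window size, and traffic numbers are hypothetical.

```python
import statistics


def find_anomalies(requests_per_minute: list[int],
                   threshold: float = 3.0, window: int = 10) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values (a rolling z-score)."""
    anomalies = []
    for i in range(window, len(requests_per_minute)):
        baseline = requests_per_minute[i - window:i]
        mean = statistics.mean(baseline)
        stdev = statistics.pstdev(baseline)
        if stdev == 0:  # flat baseline: z-score undefined, skip this point
            continue
        z = (requests_per_minute[i] - mean) / stdev
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical requests-per-minute series with one sudden spike at index 11
traffic = [100, 104, 98, 101, 99, 103, 97, 102, 100, 101, 99, 950, 102, 98]
print(find_anomalies(traffic))
```

One limitation shows up right after the spike: the outlier inflates the baseline for the following windows, which is one reason real systems use more robust statistics or learned models.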

In summary, generative AI models can learn from queries and inputs during their operational phase, enhancing their ability to generate relevant and high-quality content. This learning process involves various techniques and must be managed carefully to address privacy, bias, and technical challenges.

For more information on GenAI security considerations, read: Generative AI – a New Huge Attack Surface for Enterprises? and Is Your Ground Truth Data’s Privacy and Security at Risk?

Qualitest is the world’s leading managed services provider of AI-led quality engineering solutions. We help brands transition through the digital assurance journey and make the move from conventional functional testing to adopt innovations such as automation, AI, blockchain, and XR.

Using these AI-powered solutions and solutions for AI apps, we have helped a leading tech company ensure its VR headset captured full-body motion data, complete egocentric data, and data inclusivity in diverse real-world environments; another tech innovator capture diverse speech to train its next-gen natural language processing model; a university use AI predictive modeling to improve its core business metrics; and a social media corporation improve speech recognition data accuracy.

Qualitest has a long history of using AI in testing and of testing AI, and has built up extensive experience, expertise, and strong propositions for both. In the recent Forrester Wave™ report, Qualitest received the highest score possible in the testing of AIIA (AI-infused applications) and GenAI criteria. The Forrester Wave™: Continuous Automation and Testing Services, Q2 2024 report recommends, “Firms already delivering AI and GenAI should consider Qualitest for AI-related testing services.”

Our specialized testing services safeguard your AI applications against errors, biases, and data issues that could impact your business. Our expert data scientists provide comprehensive testing to ensure a smooth, successful AI rollout and protect your reputation.

With Generative AI becoming more accessible, organizations are quickly adopting the technology to deliver new services, conquer new markets and optimize business processes. But applications infused with GenAI carry unique challenges and risks. Before you deploy, you need specialized Quality Engineering from a team of experts — like ours.

No matter how effective they’ve been before, old testing methods won’t work with unpredictable, non-rules-based AI/ML systems. To uncover the data issues, errors and biases that can harm your reputation and your business, you need new expertise. Our data scientists-in-test are specially trained to derisk your AI rollout.

Qualisense Test.Predictor is our new AI-powered tool that dramatically improves risk-based testing strategies. It uses AI and automation to speed up time to release, cut costs and redeploy resources to focus on what matters most.

Leap forward with product deployment by leveraging our AI Data Services for AI/ML enablement. Harness our expertise in delivering various types of real-world and synthetic data including AI training data at scale globally.

Take your raw business data to the forefront of innovation with an advanced AI development platform that has already launched everything from some of the most incredible AI technologies in existence to commercially operational applications.

In Qualitools, we have prepared an open ecosystem of dynamic AI-enabled, API-enabled tools that are technology-agnostic, vendor-independent, and deployable to anticipate and solve diverse enterprise transformation challenges.

The accelerated pace of Artificial Intelligence (AI) is driving disruptive change in enterprise operations. In our blog on the future of AI, we examine the natural evolution of AI, focusing on OpenAI and open-source models. Understanding the collaborative nature of these frameworks and the unique advantages of OpenAI’s tools is essential for C-suite leaders aspiring to drive innovation and maintain a competitive edge. Read our blog: The Future of AI is Open: Adopt it Now to Be Future-Ready

Ready to get off the sidelines and into the GenAI game? Book a strategy session with our GenAI experts.