What is ETL testing?

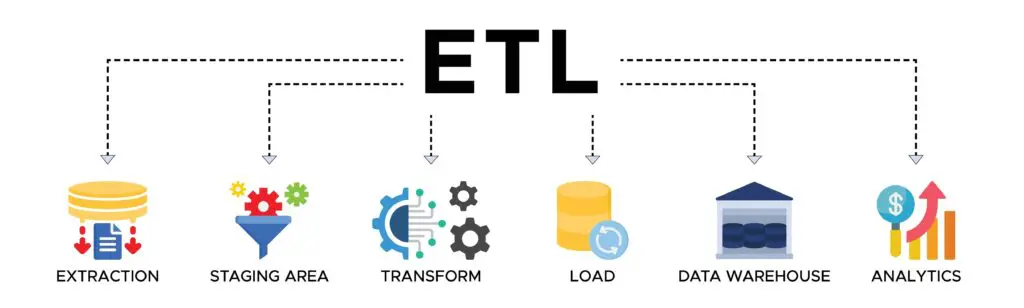

ETL stands for Extract, Transform, and Load. ETL testing is a data-centric validation process for enterprises that rely on accurate data migration. It verifies each stage (extraction, transformation, and loading) to confirm that data moves from source to target systems with its accuracy, integrity, and consistency intact. Because analytics and reporting depend on that data, ETL testing is a cornerstone of data-driven decision-making.

Essential objectives of ETL testing

ETL testing protects data quality throughout the migration process by checking that transformations are accurate, complete, and correctly integrated. It improves data reliability and reduces operational disruption by identifying issues such as data duplication, missing records, transformation errors, and load failures. Validation and reconciliation at each stage confirm that the data conforms to business rules and is ready for analytics and reporting.

How does the ETL testing process work?

Extract: Data is gathered from various sources, such as databases, APIs, and files, that may use different formats. This consolidation is essential for consistent data handling across systems.

Transform: The extracted data is then transformed to meet specific business needs. This stage includes cleansing, joining, filtering, and reformatting data to ensure it aligns with business rules and maintains reliability. Data transformation testing is crucial here, checking each transformation step for accuracy.

Load: Finally, the transformed data is loaded into a target system, such as a data warehouse, making it ready for analytics. ETL testing at this stage checks that records load without duplication or errors, so that data integrity is preserved.
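
The three stages can be sketched as a small pipeline. This is only an illustration using Python's standard library: the CSV content, the `customers` table, and the rule that rows without an amount are dropped are all assumptions made for the example, not part of any particular ETL tool.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent formatting.
SOURCE_CSV = """id,name,amount
1, Alice ,10.50
2,BOB,20
3,carol,
"""


def extract(text):
    """Extract: read raw rows from the CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and title-case names, convert types, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # filtering rule assumed for this sketch
        cleaned.append((int(row["id"]), row["name"].strip().title(), float(amount)))
    return cleaned


def load(rows, conn):
    """Load: insert the transformed rows and return the target row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
raw = extract(SOURCE_CSV)
clean = transform(raw)
loaded = load(clean, conn)
print(f"extracted={len(raw)} transformed={len(clean)} loaded={loaded}")
```

Keeping each stage as a separate function makes it possible to test extraction, transformation, and loading independently.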

Types of ETL testing

Different types of ETL testing validate distinct aspects of the ETL process:

Data completeness testing

Ensures all source data is fully loaded, with no missing records or values, so that downstream analysis works from a complete dataset.
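
A completeness check might look like the following sketch. The function name `completeness_report`, the `id` key, and the list of required fields are hypothetical; in practice the rows would come from queries against the real source and target.

```python
def completeness_report(source_rows, target_rows, key="id", required=("name",)):
    """Compare row counts, find keys missing from the target, and flag empty required fields."""
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    null_fields = [
        (row[key], field)
        for row in target_rows
        for field in required
        if row.get(field) in (None, "")
    ]
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_keys": sorted(source_keys - target_keys),
        "null_fields": null_fields,
    }


# Hypothetical sample data: one record never arrived, one arrived without a name.
source = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": "Bob"},
    {"id": 3, "name": "Carol"},
]
target = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": None},
]

report = completeness_report(source, target)
print(report)
```

Comparing keys as well as counts matters because equal counts can hide a missing record that is offset by an unexpected one.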

Data transformation testing

Validates that transformations, such as data mapping or type conversions, are executed accurately to maintain data consistency.
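
One common way to test transformations is a table of input records paired with their expected outputs. The sketch below assumes a hypothetical mapping rule (`COUNTRY_MAP`) and hypothetical field names; the pattern, not the specific rule, is the point.

```python
from datetime import date

# Hypothetical business rule: map country codes to display names.
COUNTRY_MAP = {"US": "United States", "DE": "Germany"}


def transform_record(src):
    """Apply the mapping and type conversions under test."""
    return {
        "customer_id": int(src["cust_id"]),
        "country": COUNTRY_MAP.get(src["country_code"], "Unknown"),
        "signup_date": date.fromisoformat(src["signup"]),
    }


# Each case pairs a source record with the target record it should produce.
CASES = [
    (
        {"cust_id": "42", "country_code": "US", "signup": "2024-01-15"},
        {"customer_id": 42, "country": "United States", "signup_date": date(2024, 1, 15)},
    ),
    (
        {"cust_id": "7", "country_code": "XX", "signup": "2023-12-31"},
        {"customer_id": 7, "country": "Unknown", "signup_date": date(2023, 12, 31)},
    ),
]


def run_cases(cases):
    """Return every case whose actual output differs from the expected output."""
    failures = []
    for src, expected in cases:
        actual = transform_record(src)
        if actual != expected:
            failures.append((src, expected, actual))
    return failures


failures = run_cases(CASES)
print(f"{len(CASES) - len(failures)} of {len(CASES)} transformation cases passed")
```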

Data quality testing

Detects data anomalies, duplicates, and other quality issues, ensuring only high-quality data flows through the ETL pipeline.
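
As an illustration, the check below flags duplicate keys, missing amounts, and out-of-range amounts. The field names and the accepted range are assumptions chosen for the example; real thresholds would come from the business rules.

```python
from collections import Counter


def find_quality_issues(rows, key="id", min_amount=0.0, max_amount=10_000.0):
    """Return (issue_type, key) pairs for duplicates and anomalous amounts."""
    issues = []
    counts = Counter(row[key] for row in rows)
    for value, count in sorted(counts.items()):
        if count > 1:
            issues.append(("duplicate_key", value))
    for row in rows:
        amount = row.get("amount")
        if amount is None:
            issues.append(("missing_amount", row[key]))
        elif not (min_amount <= amount <= max_amount):
            issues.append(("amount_out_of_range", row[key]))
    return issues


# Hypothetical batch containing a duplicate key, a negative amount, and a missing amount.
rows = [
    {"id": 1, "amount": 25.0},
    {"id": 2, "amount": -5.0},
    {"id": 2, "amount": 40.0},
    {"id": 3, "amount": None},
]

issues = find_quality_issues(rows)
for issue_type, record_key in issues:
    print(issue_type, record_key)
```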

Data integrity testing

Examines relationships and dependencies within the data, maintaining referential integrity and preventing corruption.
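
A referential integrity check can be expressed as a query for orphaned child rows. The sketch below uses an in-memory SQLite database with hypothetical `customers` and `orders` tables; the same `LEFT JOIN ... IS NULL` pattern applies to most SQL targets.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
    -- Order 102 references customer 3, which does not exist.
    INSERT INTO orders VALUES (100, 1, 9.99), (101, 2, 5.00), (102, 3, 7.50);
    """
)


def find_orphans(connection):
    """Return (order_id, customer_id) for orders whose customer is missing."""
    query = """
        SELECT o.id, o.customer_id
        FROM orders AS o
        LEFT JOIN customers AS c ON c.id = o.customer_id
        WHERE c.id IS NULL
        ORDER BY o.id
    """
    return connection.execute(query).fetchall()


orphans = find_orphans(conn)
print(orphans)
```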

Data load testing

Simulates high data volumes to test ETL performance under peak loads, identifying bottlenecks that guide performance tuning.
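
A very small version of a load test times a bulk insert and reports throughput. This sketch uses in-memory SQLite and a hypothetical `events` table, so the numbers it prints say nothing about a real warehouse; it only shows the measurement pattern.

```python
import sqlite3
import time


def timed_bulk_load(row_count):
    """Insert row_count synthetic rows and return (rows_loaded, seconds_elapsed)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
    rows = ((i, f"payload-{i}") for i in range(row_count))
    start = time.perf_counter()
    with conn:  # commits the transaction if the insert succeeds
        conn.executemany("INSERT INTO events VALUES (?, ?)", rows)
    elapsed = time.perf_counter() - start
    loaded = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    conn.close()
    return loaded, elapsed


loaded, elapsed = timed_bulk_load(20_000)
rows_per_second = loaded / elapsed if elapsed > 0 else float("inf")
print(f"Loaded {loaded} rows in {elapsed:.3f}s ({rows_per_second:,.0f} rows/s)")
```

Running the same function with increasing row counts gives a rough picture of how load time scales with volume.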

Data integration testing

Verifies accurate data flow and synchronization across systems, preventing compatibility issues in the pipeline.

Data reconciliation testing

Ensures data consistency by comparing source and target records to detect mismatches or data loss.
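
One way to reconcile records is to fingerprint each row and compare the fingerprints by key. The sketch below assumes rows are dictionaries with an `id` key; the function names and sample data are hypothetical.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the selected column values so rows can be compared cheaply."""
    joined = "|".join(str(row[column]) for column in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Report keys missing from the target, unexpected in it, or with differing values."""
    src = {row[key]: row_fingerprint(row, columns) for row in source_rows}
    tgt = {row[key]: row_fingerprint(row, columns) for row in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical sample: id 3 was lost, id 4 appeared, id 2 changed value.
source = [
    {"id": 1, "name": "Alice", "amount": 10.5},
    {"id": 2, "name": "Bob", "amount": 20.0},
    {"id": 3, "name": "Carol", "amount": 30.0},
]
target = [
    {"id": 1, "name": "Alice", "amount": 10.5},
    {"id": 2, "name": "Bob", "amount": 25.0},
    {"id": 4, "name": "Dave", "amount": 5.0},
]

result = reconcile(source, target, key="id", columns=("name", "amount"))
print(result)
```

Hashing keeps the comparison compact when rows have many columns, at the cost of not showing which column differs; a follow-up column-by-column diff on the mismatched keys can fill that gap.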

Data retention testing

Confirms adherence to data retention policies, ensuring archived data complies with governance standards.
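
A retention check could be sketched as below, assuming a hypothetical policy in which records older than 365 days must be marked as archived. The field names `created` and `archived` are illustrative.

```python
from datetime import date, timedelta

RETENTION_DAYS = 365  # hypothetical policy: older records must be archived


def retention_violations(rows, today, retention_days=RETENTION_DAYS):
    """Return ids of records older than the retention window that are not archived."""
    cutoff = today - timedelta(days=retention_days)
    return [row["id"] for row in rows if row["created"] < cutoff and not row["archived"]]


rows = [
    {"id": 1, "created": date(2024, 6, 1), "archived": False},   # within the window
    {"id": 2, "created": date(2022, 1, 10), "archived": True},   # old, correctly archived
    {"id": 3, "created": date(2022, 3, 5), "archived": False},   # old, not archived
]

violations = retention_violations(rows, today=date(2024, 12, 31))
print(violations)
```

Passing `today` as a parameter keeps the check deterministic, which makes it easier to rerun against historical snapshots.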

Error handling testing

Assesses the ETL process’s ability to handle errors effectively, focusing on how issues are logged and managed and how data integrity is preserved.
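
To illustrate, the sketch below feeds malformed records to a loader and checks that each one is logged and rejected while valid rows still reach the target. The loader `load_with_rejects`, the logger name, and the record layout are assumptions made for the example.

```python
import logging

logger = logging.getLogger("etl.load")  # hypothetical logger name


def load_with_rejects(records, target):
    """Append valid rows to target; log and collect records that fail conversion."""
    rejects = []
    for record in records:
        try:
            row = {"id": int(record["id"]), "amount": float(record["amount"])}
        except (KeyError, TypeError, ValueError) as exc:
            logger.warning("Rejected record %r: %s", record, exc)
            rejects.append({"record": record, "error": type(exc).__name__})
            continue
        target.append(row)
    return rejects


records = [
    {"id": "1", "amount": "10.5"},   # valid
    {"id": "x", "amount": "3"},      # non-numeric id
    {"id": "3"},                     # missing amount field
    {"id": "4", "amount": None},     # null amount
]

target = []
rejects = load_with_rejects(records, target)
print(f"loaded={len(target)} rejected={len(rejects)}")
```

A test built on this pattern can assert both sides: that the target holds only valid rows and that every bad record is accounted for in the reject list.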

Key benefits of ETL testing

- Data accuracy

- Enhanced data quality

- Business decision support

- Regulatory compliance

- Operational efficiency

- Risk mitigation